“Shadow banning” has sparked discussion and debate in the ever-changing world of social media. The term describes a platform limiting the visibility of a user’s content without telling them, which makes it harder for them to participate while giving the impression that everything is fine. This blog post looks into the phenomenon of shadow bans and specifically investigates whether Facebook uses this strategy to control the flow of information and the connections between users on its platform. Does Facebook shadow ban its users? Read on to find out what is known about shadow bans and their potential impact on your social media experience.

Understanding Shadow Banning and Its Impact

Shadow banning, also called stealth banning or ghost banning, is a way for websites to hide a user’s content or activity without the user knowing. The goal is to stop bad behavior without alerting the person to the restriction. The practice dates back to online forums and community sites in the early days of the internet; back in the 1980s, moderators on Usenet would hide posts from users who were being disruptive. Since then, it has spread to other online spaces such as social media, blogs, and websites.

Examples of Shadow Banning on Other Platforms

- Twitter: Twitter has been accused of “shadow banning” people by making their tweets less visible in search results and follower lists. In 2018, Republicans said the company was hiding their content, which caused considerable controversy. Twitter rejected the claims and said that it was simply applying its own rules to improve the site.

- Reddit: Shadow banning is a common way to deal with spam, trolls, and abusive users on Reddit. Shadow-banned users can still see and use the platform, but their posts and comments are hidden from the rest of the community. The goal is to keep these users from knowing that they have been banned, so they don’t simply create new accounts and continue the same behavior.

- Instagram: Instagram has been accused of using shadow banning to hide content that doesn’t follow its rules or is considered spam. When users are shadow-banned, their posts and hashtags might not show up in searches or feeds, which can reduce their engagement. Instagram hasn’t confirmed that it uses shadow bans, but it has said that it uses algorithms to filter out low-quality content and interactions.

- Facebook: Facebook has also been accused of shadow banning, especially when it comes to political content. Users have said that their posts have been deleted or hidden, making them less likely to show up in news feeds and search results. Facebook says it doesn’t ban people based on their political views, but it does use algorithms to rank content based on how much people interact with it and on its perceived quality.

Potential Reasons for Shadow Banning

- Control spam: Shadow banning is a tool that is used to hide spam content, making sure that the platform stays useful and easy to use. This helps keep the platform’s general quality high and keeps spam from taking over.

- Enforce community guidelines: Platforms can use shadow banning to enforce their community guidelines and limit content that doesn’t follow these rules. This can include hateful or abusive speech, harassment, or other harmful behavior.

- Stop trolls and toxic behavior: Trolls and users who act in a toxic way can cause problems for the community and make people less likely to join conversations. Shadow banning these people can help keep the environment positive and welcoming for all users.

- Minimize the spread of false information: With the rise of fake news and campaigns to spread false information, platforms may use shadow banning to limit the spread of false or misleading content, protecting their users and keeping their reputation.

- Optimize user experience: Social media sites often use algorithms to select content based on what users like and how much they interact with it. Shadow banning can be part of these algorithms, so that users don’t see low-quality or irrelevant content, which improves their experience on the site.

Facebook’s Content Moderation Policies

Facebook has published a set of community standards that define what kinds of content are allowed on the site. The goal of these rules is to encourage polite and responsible behavior, keep people safe, and maintain a sense of community. Some of the most important areas that Facebook’s community standards cover are:

- Nudity and Sexual Activity: Facebook doesn’t let you share explicit sexual content or nudity, except in a few cases, such as artistic or educational content.

- Violence and Graphic Content: You can’t share violent or graphic content on Facebook. This includes content that celebrates or supports violence.

- Hate Speech: Hate speech is not allowed on Facebook. Hate speech is described as speech that attacks or dehumanizes people or groups based on their identity, such as race, ethnicity, national origin, religious affiliation, sexual orientation, gender identity, or disabilities.

- Bullying and Harassment: Bullying and harassment are not allowed on Facebook. This includes content that intimidates, threatens, or harasses other people.

- Misinformation: Facebook wants to stop the spread of false and misleading content and has put in place a number of rules and tools to do so.

Explanation of the Content Review Process

To enforce these community standards, Facebook uses both user reports and automated systems to find content that might be a problem. When a piece of content is reported, a team of trained content reviewers looks at it and decides whether it complies with Facebook’s community standards. The content review process usually consists of the following steps:

- Triage: Reported content is triaged to assess how urgent it is and whether it needs further review.

- First Review: A trained content moderator looks at the content and decides whether it complies with Facebook’s community standards.

- Escalation: The content is sent to a higher level of review if it is thought to go against Facebook’s community standards.

- Final Review: A senior content moderator looks at the escalated content and makes a final decision about whether or not it breaks Facebook’s community standards.

- Enforcement: If the content is found to violate Facebook’s community standards, it may be taken down or hidden, and the person who posted it may face a penalty (for example, a warning or the closure of their account).
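
The review steps above can be read as a simple pipeline. The Python sketch below is purely illustrative: the `Report` fields, the severity threshold, and the reviewer callbacks are hypothetical stand-ins chosen for this example, not a description of Facebook’s actual system.

```python
from dataclasses import dataclass, field
from typing import Callable, List


# Hypothetical model of a reported post; all field names are assumptions.
@dataclass
class Report:
    content: str
    severity: int  # assumed triage score, 0 (low) to 10 (high)
    history: List[str] = field(default_factory=list)


# A reviewer returns True when the content violates the standards.
Reviewer = Callable[[str], bool]


def review(report: Report, first: Reviewer, senior: Reviewer,
           min_severity: int = 3) -> str:
    """Walk a report through triage, review, escalation, and enforcement."""
    # Triage: low-severity reports are not reviewed further.
    report.history.append("triage")
    if report.severity < min_severity:
        return "no_action"

    # First review: a moderator checks the content.
    report.history.append("first_review")
    if not first(report.content):
        return "no_action"

    # Escalation and final review: a senior moderator makes the final call.
    report.history.append("escalation")
    report.history.append("final_review")
    if not senior(report.content):
        return "no_action"

    # Enforcement: the content is taken down or hidden.
    report.history.append("enforcement")
    return "removed_or_hidden"
```

In this sketch, a report only reaches enforcement if it passes triage and both reviewers agree it is a violation; the `history` list records which stages it went through.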

Role of AI in Content Moderation

Facebook uses AI to find and prioritize potentially harmful content, and it also uses image and video recognition algorithms to automatically flag content that might be sexual or violent. The company is also investing in AI tools that find and remove hate speech. The end goal is to make the platform safer and more inclusive.

Does Facebook Shadow Ban Its Users?

“Shadow banning” on Facebook refers to a situation where a user’s content is not seen by their followers despite their account being active. This results in reduced reach and fewer interactions on posts. Some users claim to have experienced this phenomenon.

Independent Research and Findings

Some studies have been cited as evidence of “shadow banning,” meaning that Facebook’s algorithm suppresses certain content and makes it harder for users to be seen. One NYU study reportedly found that right-wing pages received less engagement than left-wing pages. In another study, Media Matters reportedly found that even though conservative content got a lot of attention, it was less likely to be included in trending news. Differences in engagement or visibility do not by themselves show deliberate suppression, so these findings should be read with caution.

Analysis of Facebook’s Algorithm and its Impact on Content Visibility

Facebook uses an algorithm to decide what shows up in users’ news feeds. The algorithm looks at things like the type of content, how active the user is, and how much they engage with the content. It gives more weight to engaging content and less weight to low-quality or spam content. Because the details of how it works are not public, Facebook has been criticized for a lack of transparency. The algorithm can demote certain content and limit how visible a user is. For example, if a user’s content is consistently low quality or spam, the algorithm can lower that person’s ranking.
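
To make the idea of demotion concrete, here is a minimal Python sketch of an engagement-based ranking with a low-quality penalty. Facebook’s real algorithm is not public, so everything here is an assumption for illustration: the `Post` fields, the `low_quality_cutoff`, and the `demotion` factor are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


# Hypothetical post record; the signals and their scales are assumptions.
@dataclass
class Post:
    author: str
    engagement: float  # assumed signal, e.g. combined likes, comments, shares
    quality: float     # assumed quality estimate between 0.0 and 1.0


def score(post: Post, low_quality_cutoff: float = 0.3,
          demotion: float = 0.1) -> float:
    """Score a post by engagement weighted by quality, demoting low quality."""
    base = post.engagement * post.quality
    # Demote rather than delete: the post stays up but ranks far lower.
    if post.quality < low_quality_cutoff:
        base *= demotion
    return base


def rank_feed(posts: List[Post]) -> List[Post]:
    """Return posts ordered from highest to lowest score."""
    return sorted(posts, key=score, reverse=True)
```

Under these assumptions, a post with high engagement but a very low quality estimate can still rank below a modestly popular, higher-quality post. From the author’s side, that looks like reduced reach even though nothing was removed.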

A Step-by-Step Guide to Removing the Facebook Shadowban

With UnbanMeNow, it’s easy to fix the Facebook shadowban. Here’s a step-by-step explanation of how to do it:

Select the Facebook Platform: The first step is to go to the UnbanMeNow website and pick the platform you need help with. In this case, it is Facebook. Once you’ve picked Facebook, click the “Unban Me Now!” button to start the process.

Provide Your Basic Information: You will be asked to provide some basic information, like your full legal name, email address, and platform username, which are all linked to your account. This information will help UnbanMeNow find your account and figure out what’s going on. Click “next” to keep going.

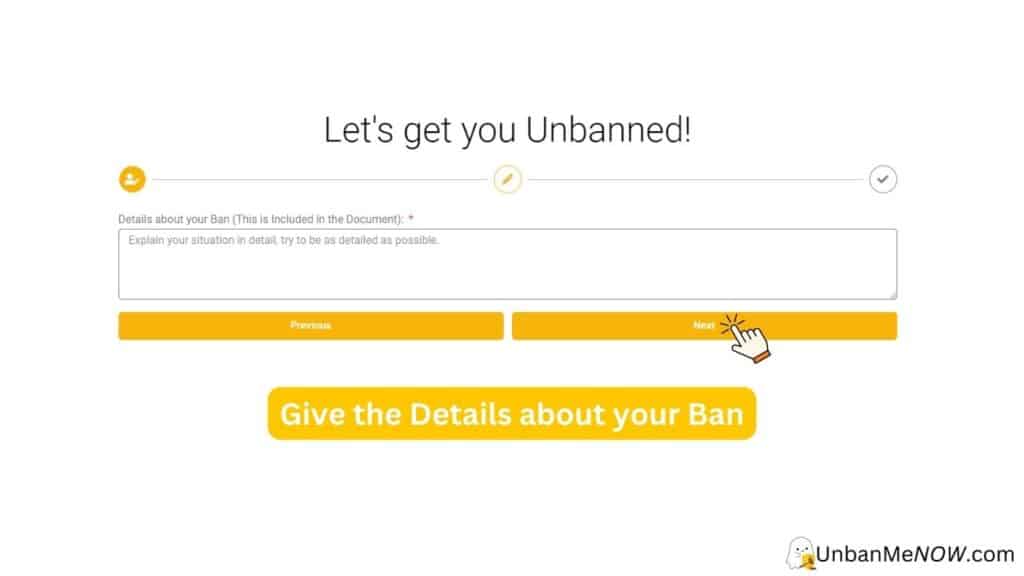

Give More Details: The next step is to explain your situation in more detail. Describe as fully as you can what happened before you were shadowbanned, what you did to fix the problem, and why you think you were shadowbanned.

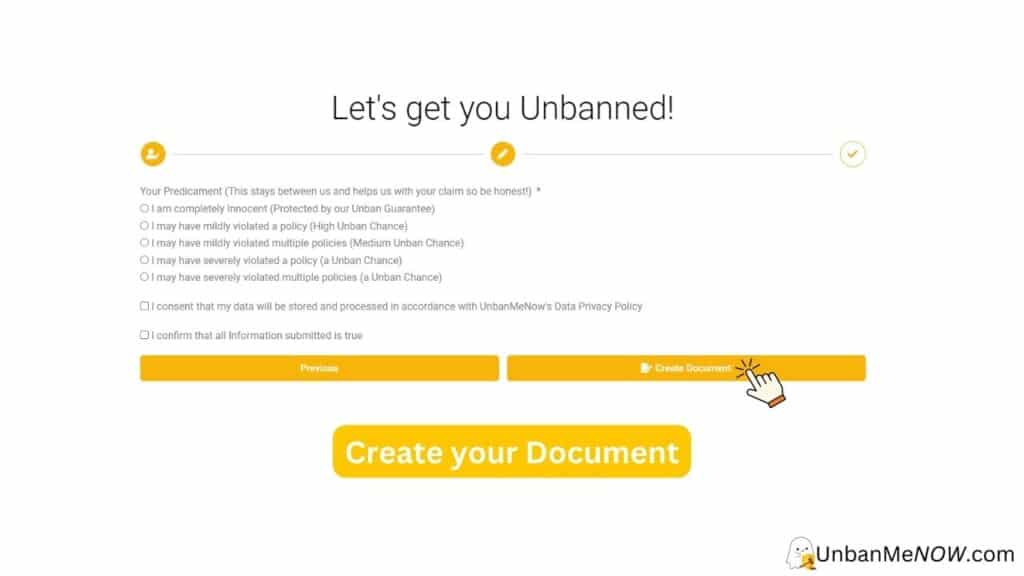

Choose Your Problem: UnbanMeNow lets you pick from a number of different problems. Choose the one that best matches your situation. With this information, UnbanMeNow can tailor the document to your needs. After you choose your case, you will be asked to check the boxes consenting to the use of your information. This lets UnbanMeNow keep and use your information in accordance with its Data Privacy Policy. You will also be asked to confirm that all the information you gave is correct. After you agree to the Data Privacy Policy, click the “Create Document” button. UnbanMeNow will then generate a document that describes your case and argues why you shouldn’t be banned, based on the information you provided.

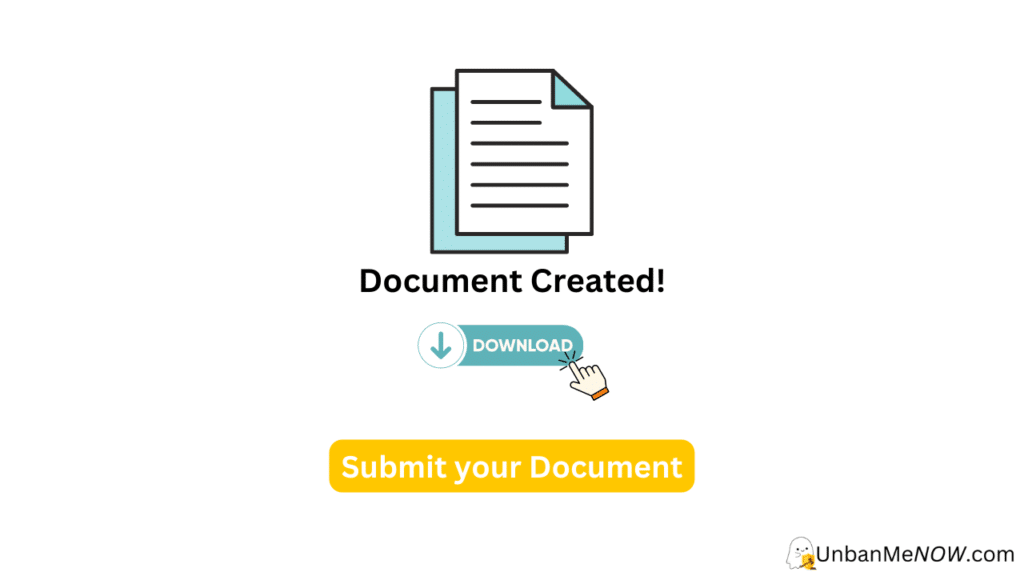

Remove the Shadowban: The final step is to download the document and submit it. To do this, click on the button that says “Download document.” After you have submitted the document, all you can do is wait. UnbanMeNow says that once you submit the document, your shadowban will be lifted in a few hours.

Final Thoughts

There has been a lot of talk about “shadow banning” on Facebook, and many people aren’t sure if it’s true or not. Even though the platform has always said that shadow banning doesn’t happen, many users and content creators have mentioned things that seem to show otherwise. Facebook’s lack of openness and clear communication has led to more doubts and made it harder for people to agree on what’s going on.

People Also Asked

Our users are increasingly worried about a practice called “shadow banning,” in which certain content or users are hidden without their knowledge. We have put together a list of questions from our users about shadow banning on Facebook to help address these worries. Read on to find out more about this controversial practice and what it could mean for the future of online content.

What types of content or users are typically targeted for shadow banning on Facebook?

Facebook typically targets accounts or content that break its community standards, such as spam, hate speech, harassment, or false information. Shadow banning is a way to hide such content from other users without actually removing it, which makes it less likely that other users will see it.

How can I tell if my Facebook account has been shadow banned?

To find out if your Facebook account has been shadow banned, you can post something and see if it shows up in the news feeds of your friends or followers. If your posts don’t show up or only show up for a small number of people, it could be because your account has been shadow banned.
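
If you keep track of how many people each of your posts reaches, a rough comparison against your own past numbers can make this check less subjective. The Python sketch below is only an illustration: the reach figures are assumed to be numbers you record yourself, and the 25% threshold is an arbitrary choice for the example. A drop in reach can have many causes, so a positive result is a hint, not proof of a shadow ban.

```python
from statistics import median
from typing import Sequence


def reach_dropped(past_reach: Sequence[int], new_reach: int,
                  threshold: float = 0.25) -> bool:
    """Return True if new_reach is below `threshold` times the median past reach."""
    if not past_reach:
        raise ValueError("need at least one past post to compare against")
    # The median is less sensitive than the mean to one unusually viral post.
    baseline = median(past_reach)
    return new_reach < threshold * baseline
```

For example, with past posts reaching 400, 500, and 600 people, a new post that reaches only 50 people would be flagged, while one that reaches 450 would not.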

Is there any way to prevent or reverse a shadow ban on Facebook?

To prevent or reverse a shadow ban on Facebook, you should read and follow Facebook’s community standards and make sure that your posts and actions don’t break them. If you think that your Facebook account was shadow banned by mistake, you can contact Facebook’s support team and ask them to review the decision. You can also try to improve the quality of your posts and avoid behavior that could get you shadow-banned, such as sharing a lot of spammy content.